Accelerate Security with Threat Stack Telemetry

Threat Stack is now F5 Distributed Cloud App Infrastructure Protection (AIP). Start using Distributed Cloud AIP with your team today.

There’s a lot of value in Threat Stack’s deep, continuous telemetry. By casting a wide net, our customers get the complete story about what’s happening in their cloud environments, with comprehensive security data. Of course they’re not poring over this data all of the time. But when they really need it most — after a breach or during a compliance fire drill — our detailed telemetry enables faster forensic investigations and easier generation of artifacts for auditors.

What does this telemetry look like, though, and what future use cases are we designing it for? In this article, I plan to answer the first part of that question, and leave you with a few hints about where we’re headed with the second part.

The following example simulates a common attacker technique: Using the EC2 instance metadata service to gather intel and dig deeper in the environment. As a first step, our example assumes the attacker has already stolen SSH keys. Here, we’ll look at what happens next.

Our investigation & Threat Stack telemetry

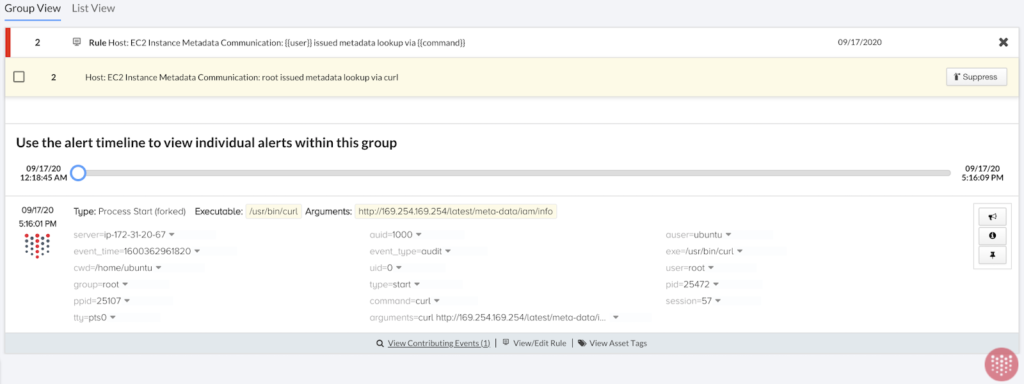

Our investigation begins with a high-severity alert in Threat Stack, indicating suspicious EC2 Instance Metadata Communication.

The information button exposes all of the telemetry associated with the alert:

As an aside: There are many ways users can customize alerting to their own environments. In fact, many of the downstream behaviors we’ll observe here could be good candidates for alerting. But going forward, I’m going to focus on the raw telemetry that Threat Stack records.

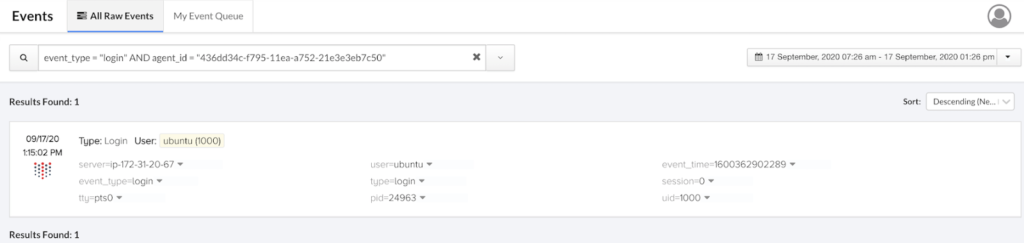

We can pivot from this alert to Threat Stack’s Event Search feature, which provides a full window into the past three days of activity in your environment. Here, we can use the agent_id to focus on the EC2 instance that triggered the alert and look for SSH login activity.

I’m restricting the timeframe via the UI, and here’s the Threat Stack Event Search query I’m using:

event_type = "login" AND agent_id = "436dd34c-f795-11ea-a752-21e3e3eb7c50"

Much like alerts, Threat Stack events allow you to see the full underlying telemetry:

Now that we’ve isolated the login event, we can grab the timestamp to further narrow the search window.

Here’s how you can inspect an even smaller window of time via a query (big thanks to Threat Stack Lead Security Analyst Ethan Hansen for describing these techniques in “How to Track Agent-Based User Activity”):

agent_id = "436dd34c-f795-11ea-a752-21e3e3eb7c50" AND event_time >= 1600362902289 AND event_time <= 1600362992764
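If you find yourself narrowing the window repeatedly, it can help to generate the query string rather than edit it by hand. The following is a minimal sketch, not part of Threat Stack's tooling: the helper name `build_window_query` is hypothetical, and it assumes only the query syntax shown above (`agent_id`, `event_time`, and epoch-millisecond bounds).

```python
def build_window_query(agent_id: str, start_ms: int, end_ms: int) -> str:
    """Build an Event Search query for one agent and a closed time window.

    Hypothetical helper: it only formats a string in the syntax used in this
    article and does not call any Threat Stack API.
    """
    if start_ms > end_ms:
        raise ValueError("start_ms must not be later than end_ms")
    return (
        f'agent_id = "{agent_id}" '
        f"AND event_time >= {start_ms} AND event_time <= {end_ms}"
    )


# Reproduce the query above from the agent ID and the two timestamps.
query = build_window_query(
    "436dd34c-f795-11ea-a752-21e3e3eb7c50",
    1600362902289,
    1600362992764,
)
print(query)
```

The resulting string can be pasted into Event Search; widening the window later is then a matter of changing the two bounds.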

We’re looking for user actions immediately post-login, and see that the user “ubuntu” — an AWS default for Ubuntu AMIs — went ahead and elevated their permissions to “root”. Next, we’ll expand the time window slightly and continue retracing the attacker’s steps.

A lot happens here. First, we see the attacker look for credential files on the server. While the Linux command executed successfully, the absence of corresponding file OPEN events tells us that the credentials file doesn’t exist. (Threat Stack observes full CRUD operations for FIM events.) The default credentials file doesn’t exist because we’re using IAM roles on our EC2 instances. In general this is a best practice, but later we’ll see how poor hygiene with IAM roles for EC2 can benefit an attacker.

The attacker recognizes there must be a role attached to this instance, and uses curl http://169.254.169.254/latest/meta-data/iam/info to see what they’re working with. (The instance metadata service is served over plain HTTP on this link-local address.) The role name must have looked promising, because their next step is to install the AWS Command Line Interface (awscli).

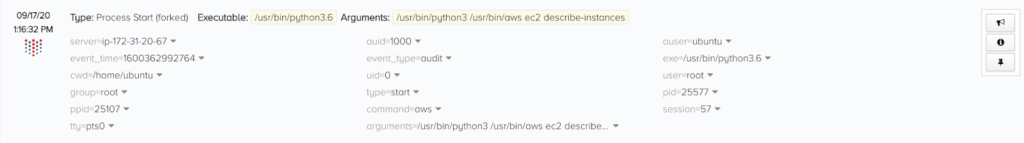

Continuing our investigation, we notice they issue an aws ec2 describe-instances command:

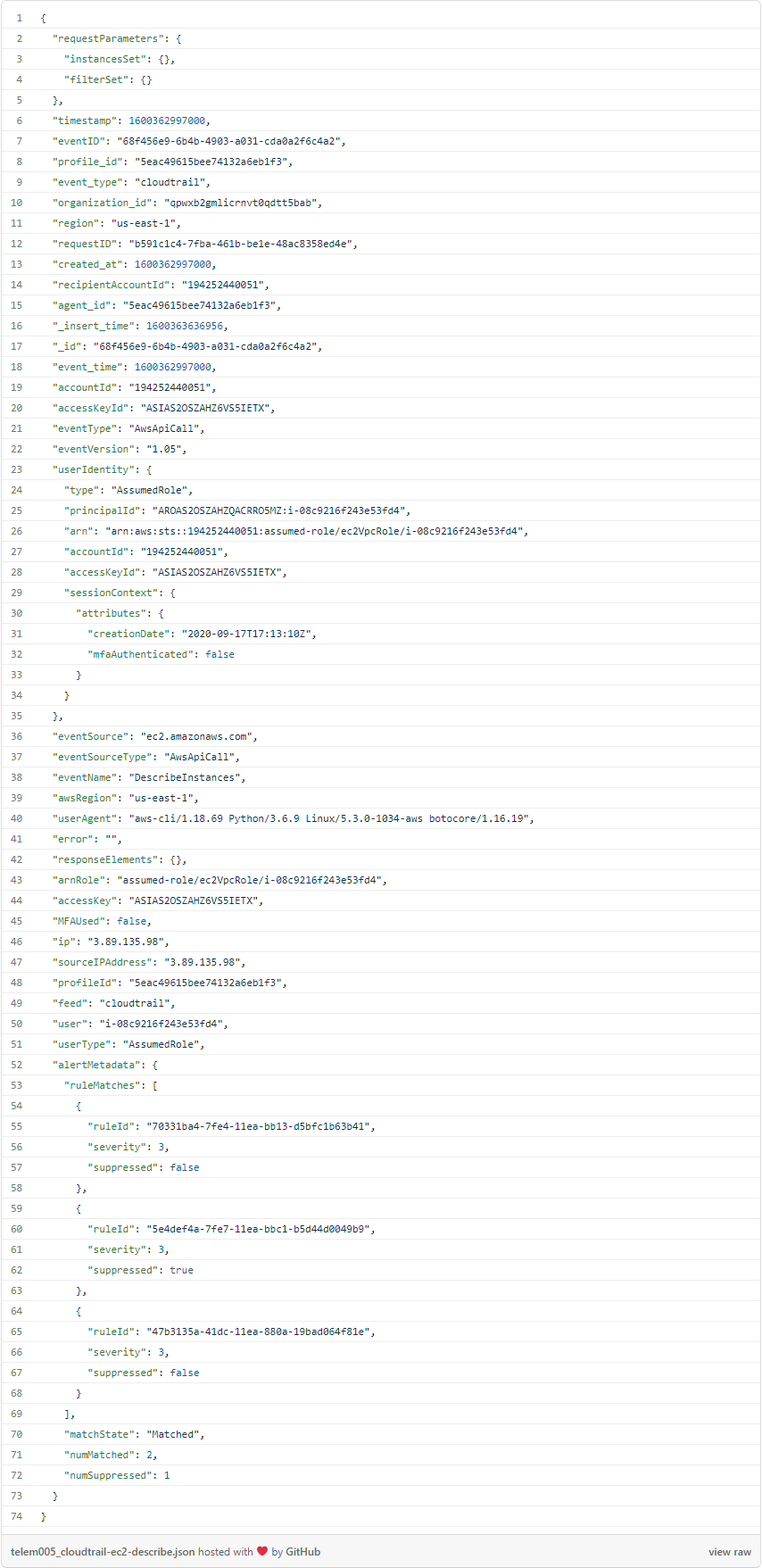

We can see if the role worked by checking the full AWS CloudTrail event stream that Threat Stack ingests:

The bones of this query are:

event_type = "cloudtrail" AND userType = "AssumedRole"

By noting that “arnRole” is set to assumed-role/ec2VpcRole/i-08c9216f243e53fd4, we can confirm that this EC2 instance (instance ID i-08c9216f243e53fd4) successfully assumed the role ec2VpcRole. The attacker has now gathered intel about the other EC2 instances in the environment, including what roles and associated SSH keys they have.
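To make the reading of “arnRole” concrete, here is a small sketch that splits the value into its parts. It assumes the three-segment form seen in this investigation (`assumed-role/<role name>/<session name>`); the names `AssumedRole` and `parse_arn_role` are hypothetical. For roles delivered through an EC2 instance profile, the session name is the instance ID, which is what lets us tie the CloudTrail event back to a specific host.

```python
from typing import NamedTuple


class AssumedRole(NamedTuple):
    role_name: str
    session_name: str


def parse_arn_role(value: str) -> AssumedRole:
    """Split an 'assumed-role/<role>/<session>' string into its parts."""
    parts = value.split("/")
    if len(parts) != 3 or parts[0] != "assumed-role":
        raise ValueError(f"unexpected arnRole format: {value!r}")
    return AssumedRole(role_name=parts[1], session_name=parts[2])


def is_instance_session(role: AssumedRole) -> bool:
    """EC2 instance IDs start with 'i-'; other sessions use caller-chosen names."""
    return role.session_name.startswith("i-")


role = parse_arn_role("assumed-role/ec2VpcRole/i-08c9216f243e53fd4")
print(role.role_name, role.session_name, is_instance_session(role))
```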

We continue our investigation, looking for login events, and we find a suspicious candidate:

Here’s the basic query:

event_type = "login" AND event_time > 1600362961

I’ll grab the “agent_id” value here to use in the next query, along with the same timestamp.
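One detail worth watching when reusing timestamps across queries: the window query earlier used 13-digit values (epoch milliseconds), while the login query here uses a 10-digit value, which looks like epoch seconds. How Event Search treats each form is not covered in this article, so the sketch below only normalizes and prints timestamps for your own bookkeeping. The helper names and the digit-count heuristic are assumptions.

```python
from datetime import datetime, timedelta, timezone

EPOCH = datetime(1970, 1, 1, tzinfo=timezone.utc)


def to_millis(value: int) -> int:
    """Heuristic (an assumption): values below 10**11 are treated as seconds."""
    return value * 1000 if value < 10**11 else value


def to_utc(value: int) -> datetime:
    """Convert an epoch timestamp (seconds or milliseconds) to a UTC datetime."""
    return EPOCH + timedelta(milliseconds=to_millis(value))


login_cutoff_ms = to_millis(1600362961)
window_start_ms, window_end_ms = 1600362902289, 1600362992764

# The cutoff used in the login query falls inside the earlier search window.
print(window_start_ms <= login_cutoff_ms <= window_end_ms)
print(to_utc(window_start_ms).isoformat())
```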

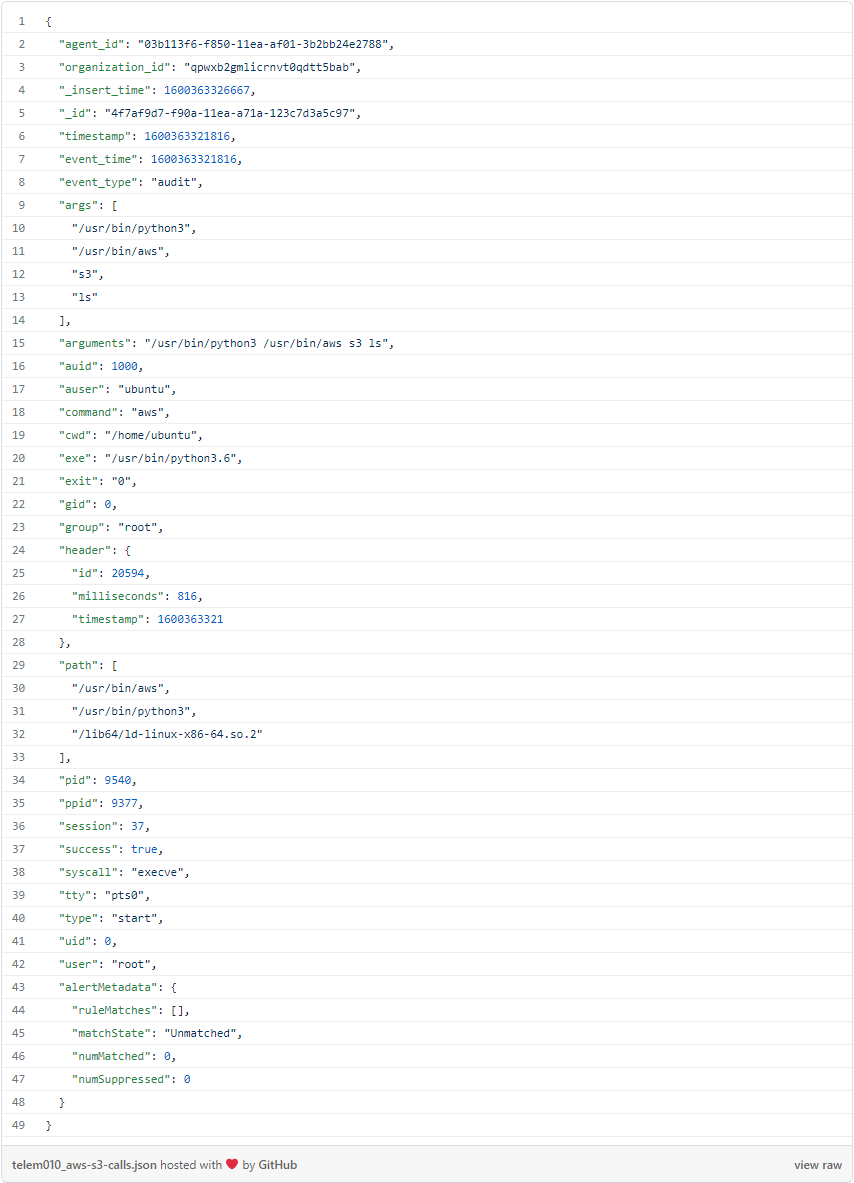

For brevity, I’ll omit the following events, but here we observe the same behavior: Elevating permissions to root and installing the awscli. Next, they skip the call to the metadata service and use the awscli to poke around S3:

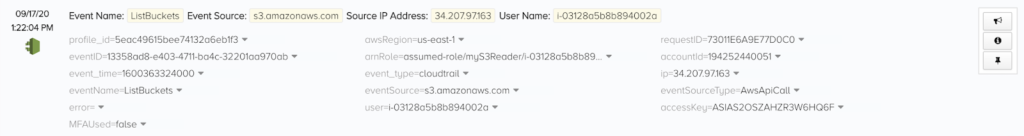

They begin by attempting to list all the buckets the role can access in S3. We can head over to the CloudTrail events in Threat Stack to confirm if the instance was able to assume the role:

Here, we can observe an arnRole value of assumed-role/myS3Reader/i-03128a5b8b894002a, which confirms that the EC2 instance is able to access S3. It’s worth noting that I have followed AWS’s recommendation to “Block all public access” to my S3 buckets — but since this instance is on a private VPC, that setting won’t protect us if the EC2 instance can use the proper IAM role.

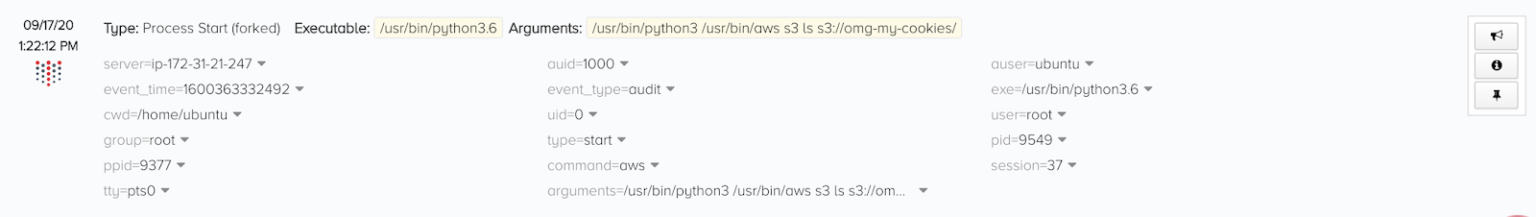

We can observe subsequent aws s3 commands to inspect various buckets (omitted for brevity), but the attacker eventually finds their target:

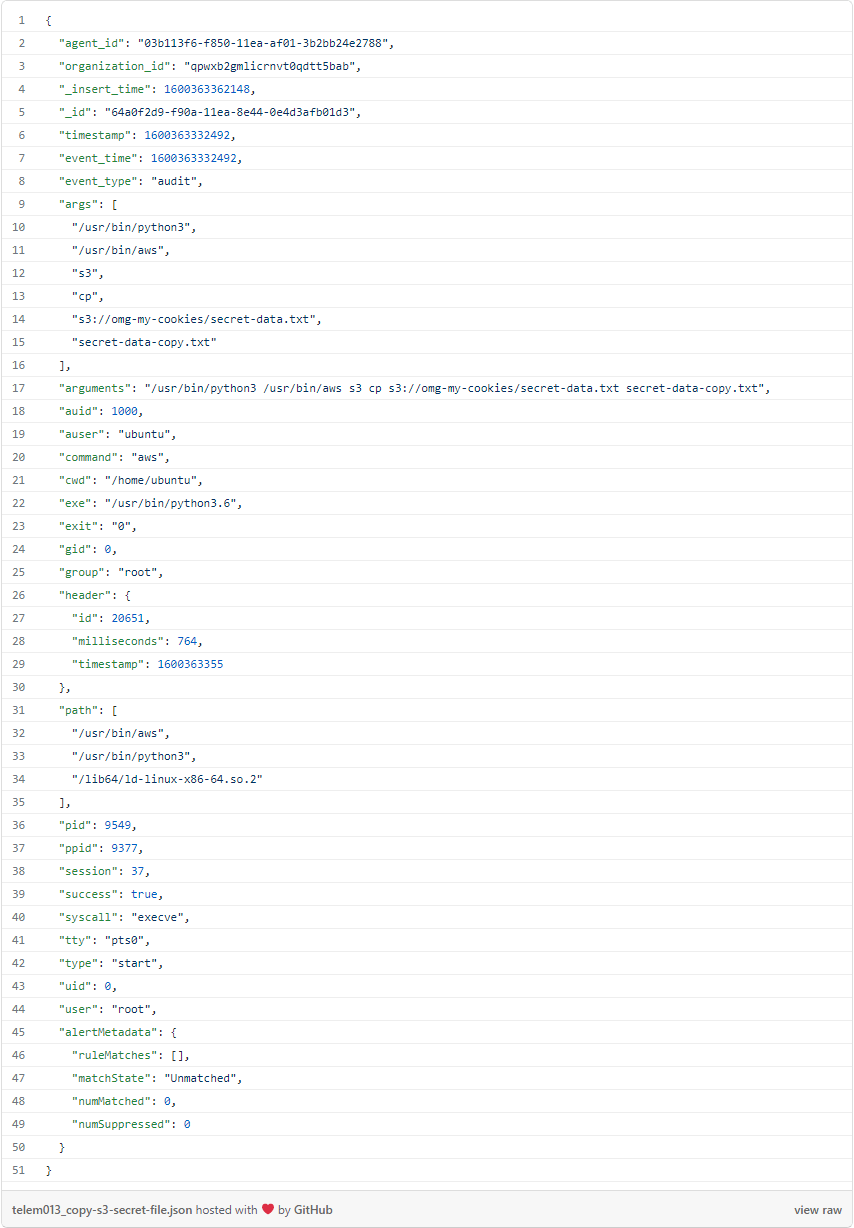

Now the cat is out of the bag: the attacker copies some secret data with aws s3 cp s3://omg-my-cookies/secret-data.txt secret-data-copy.txt

Future anomaly detection: Full-stack telemetry with ML-based insights

Threat Stack continuously collects telemetry across runtime systems. In this example, we only used SSH login events, Linux Audit events and AWS CloudTrail events, but Threat Stack extends this visibility across the full cloud stack to containers, Kubernetes, Windows servers, AWS Fargate, and more.

The detailed event data that Threat Stack persists lets security analysts skip log collection and ingestion and get right to work on forensic investigations, which are normally more involved than the simplified example that we simulated here. But we’re also looking for new ways to leverage this data with machine learning, because feeding rich data into our models means better anomaly detection for customers.

To learn more about how Threat Stack’s forthcoming ML capabilities will support a wide range of security and compliance use cases, check out “Optimizing Threat Stack’s Data Pipeline with Apache Spark and Amazon EMR” by Threat Stack Software Engineer Mina Botros. And stay tuned for additional details about Threat Stack’s upcoming ML technology release!
