QUIC Will Eat the Internet

QUIC (not an acronym) is a unique beast, but it is best thought of as a new transport protocol that solves many longstanding problems on the internet and captures most of the value provided by TCP, TLS, SCTP, IPsec, and HTTP/2. A new version of HTTP, HTTP/3, is designed to run over QUIC (carried in UDP) instead of over TLS and TCP.

Google deployed an early version of HTTP over QUIC in its browsers and web servers as early as 2014. An IETF standardization process began in 2016; after much evolution and data gathering, it is expected to produce the first batch of RFCs in early 2021. Several major internet companies, including F5, have either shipped products that use QUIC or deployed QUIC in their infrastructure. As of October 2020, 75% of Facebook’s traffic used HTTP/3 and QUIC.

These early deployments, and the IETF’s fervor for new standards built on top of QUIC, indicate that QUIC will become an important, and possibly dominant, substrate for cutting-edge applications over the internet.

Technical overview—Why QUIC?

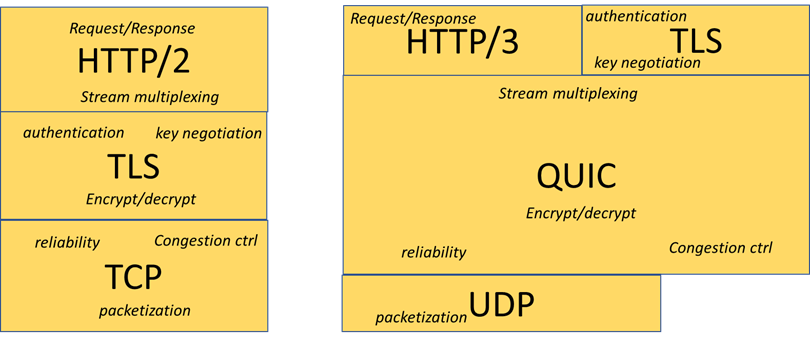

Figure 1 gives the reader an idea of what QUIC does. However, this functional decomposition doesn’t clarify why early adopters in industry are moving to QUIC:

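- Lower Latency. Web-like data transfers are dominated by the latency of three layers of setup: the TCP handshake, at least one round trip for the TLS handshake, and the HTTP request/response. Recent developments in TCP and TLS (TCP Fast Open and TLS 1.3 0-RTT) can in principle collapse this to one round trip, though this rarely works in practice. QUIC combines the transport and cryptographic handshakes, so a request typically completes in two round trips on a new connection and in one when 0-RTT resumption is possible.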

- Streams. Like HTTP/2, QUIC provides the application with multiple byte streams to increase the independence of conceptually distinct data delivered over the same connection. Implementing streams in the transport, rather than in HTTP on top of a single TCP byte stream, increases this independence further. Streams naturally fit the needs of streaming video delivery, and major internet content providers are well on their way to delivering streaming over QUIC.

- Better Loss Response. QUIC’s design improves on TCP’s ability to detect and recover from packet losses. Moreover, by presenting multiplexed streams instead of a single in-order byte stream, loss of a packet containing one object need not delay delivery of a different object.

- Multihoming. Much like MPTCP and SCTP, QUIC connections can be associated with multiple IP addresses for each endpoint. Unlike those protocols, QUIC runs over UDP with its transport headers encrypted, so it has a good chance of making it across an internet that drops unfamiliar IP protocol numbers (which SCTP relies on) and strips unfamiliar TCP header options (which MPTCP relies on).

- Security and Privacy. QUIC applies encryption at the transport layer, instead of above it. The entire UDP payload is authenticated, preventing transparent modification by intermediaries, and almost all transport information is encrypted. The ramifications of this are a white paper in itself. Suffice it to say that this substantially improves privacy and virtually eliminates the attack surface that TCP headers expose, such as injected reset packets. Unlike IPsec, this encryption is already running at web scale. It also enables:

- Extensibility. TCP is hard to change because its creators left limited extension space, and because middleboxes enforce old TCP behavior. QUIC combines explicit versioning with opaqueness to middleboxes to allow further innovation in the transport. This will allow support for future use cases and, eventually, better bulk-transfer performance than TCP.
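The loss-response point in the list above can be made concrete with a toy model. This is a minimal sketch, not QUIC’s wire format: each packet is tagged with a hypothetical stream ID and per-stream sequence number (real QUIC STREAM frames carry a stream ID and a byte offset), and one packet on stream A is lost. A single ordered byte stream, as in TCP, can deliver nothing past the gap; with independent streams, only stream A waits.

```python
"""Toy model of head-of-line blocking: one ordered byte stream vs. independent streams.
Hypothetical packet tags: (global packet number, stream id, per-stream sequence number).
"""
from collections import defaultdict

packets = [
    (0, "A", 0),
    (1, "A", 1),  # this packet is lost in transit
    (2, "B", 0),
    (3, "B", 1),
    (4, "A", 2),
]
lost = {1}
arrived = [p for p in packets if p[0] not in lost]


def deliverable_single_stream(arrived):
    """TCP-like: data reaches the application strictly in global order,
    so nothing after the first gap can be delivered."""
    received = {pn for pn, _, _ in arrived}
    delivered = []
    next_pn = 0
    while next_pn in received:
        delivered.append(next_pn)
        next_pn += 1
    return delivered


def deliverable_per_stream(arrived):
    """QUIC-like: each stream is reassembled independently, so a gap
    stalls only the stream it belongs to."""
    by_stream = defaultdict(dict)
    for pn, sid, seq in arrived:
        by_stream[sid][seq] = pn
    delivered = []
    for chunks in by_stream.values():
        seq = 0
        while seq in chunks:
            delivered.append(chunks[seq])
            seq += 1
    return sorted(delivered)


print(deliverable_single_stream(arrived))  # [0]
print(deliverable_per_stream(arrived))     # [0, 2, 3]
```

Packet 4, which also belongs to stream A, stays buffered until packet 1 is retransmitted in both models; the difference is that stream B’s data is not held hostage when streams are independent.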

There are two reasons, besides inertia, why some applications may not move to QUIC:

- Complexity: Applications that need only a single byte stream and don’t care about encryption gain nothing from the additional processing these features require.

- Middleboxes: A non-negligible percentage of internet paths block UDP. HTTP/3 deployments fall back to HTTP over TCP on these paths, but ultimately the performance impact on major websites (Google, Facebook, etc.) is likely to make the responsible devices obsolete, except where nation-states desiring surveillance mandate otherwise.
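The TCP fallback works because clients typically discover HTTP/3 through an Alt-Svc response header (RFC 7838) received over an ordinary TCP connection, and only then attempt QUIC. The sketch below parses that header under simplifying assumptions (no commas inside quoted strings, and the special value "clear" is simply ignored); the type and function names are illustrative, not from any library.

```python
"""Minimal sketch of HTTP/3 discovery via the Alt-Svc response header (RFC 7838).
Simplified: assumes no commas inside quoted strings; ignores "clear".
"""
from typing import List, NamedTuple, Optional


class AltService(NamedTuple):
    protocol: str   # ALPN token, e.g. "h3" or a draft token such as "h3-29"
    host: str       # empty string means "same host as the origin"
    port: int
    max_age: int    # seconds; RFC 7838 default is 86400 (24 hours)


def parse_alt_svc(header: str) -> List[AltService]:
    services = []
    for entry in header.split(","):
        parts = [p.strip() for p in entry.split(";")]
        if "=" not in parts[0]:
            continue  # "clear" or a malformed entry
        protocol, authority = parts[0].split("=", 1)
        host, _, port = authority.strip('"').rpartition(":")
        max_age = 86400
        for param in parts[1:]:
            name, _, value = param.partition("=")
            if name.strip() == "ma":
                max_age = int(value)
        services.append(AltService(protocol.strip(), host, int(port), max_age))
    return services


def pick_h3(services: List[AltService]) -> Optional[AltService]:
    """Return the first HTTP/3 endpoint, or None to stay on TCP."""
    return next((s for s in services if s.protocol == "h3"), None)


print(parse_alt_svc('h3=":443"; ma=3600, h3-29=":8443"'))
```

A client that finds no h3 entry, or whose QUIC attempt times out, simply keeps using its existing TCP connection.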

It is eating the Internet

Google Chrome, Google’s app clients, and Facebook’s app already support HTTP/3. Safari, Edge, and Firefox also have implementations, but (for now) they are off by default.

On the server side, implementations and/or deployments by Google, Microsoft, Facebook, Apple, Cloudflare, LiteSpeed, F5 BIG-IP, NGINX, Fastly, and Akamai either have shipped or are close to it. Recently, a leading European ISP reported that 20% of its packets were QUIC. As of February 2021, about 5% of websites used HTTP/3, and we expect this to grow once the RFCs are published.

Moreover, there is much work in standards organizations to bring QUIC beyond the HTTP use case. Draft standards at the IETF propose DNS, WebSockets, SIP, and both TCP and UDP tunnels over QUIC. QUIC came a little too late to be fully incorporated into 5G architectures, but service providers’ interest in QUIC, and its applicability to their networks, are clear. Finally, the application-facing semantics of HTTP/2 and HTTP/3 are quite similar, so most protocols and applications that run on top of HTTP/2 can be ported to run over HTTP/3 and QUIC instead of TCP with little effort.

Threats & Opportunities

QUIC & HTTP/3 will shape a variety of markets. High-performing websites and applications have a strong incentive to switch to HTTP/3 and QUIC once the ecosystem fully matures, and we expect our customers to demand HTTP/3 support at a similar tempo to their deployment of HTTP/2.

Security products need a fundamental reevaluation in a QUIC-dominated internet. Packet inspection is much more difficult without access to the TLS session keys, which usually means terminating the connection with the domain’s certificate and private key. This enhances the value of a security solution integrated with an application-level proxy, rather than a series of single-function devices.
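One nuance: the QUIC handshake is not fully hidden. Initial packets are protected with keys that anyone can derive from the client’s Destination Connection ID (sent in the clear in the packet header) and a salt published in RFC 9001, so an observer can read the TLS ClientHello, including the server name. Everything after the handshake uses keys an observer cannot derive. The sketch below, assuming QUIC version 1 and using only the Python standard library, reproduces the Initial-key derivation; it stops short of decrypting packets, which would also require AES-GCM and header-protection removal.

```python
"""Sketch: deriving QUIC v1 client Initial keys (RFC 9001, Section 5.2) from public inputs."""
import hashlib
import hmac

# Salt published for QUIC version 1 (RFC 9001, Section 5.2).
INITIAL_SALT_V1 = bytes.fromhex("38762cf7f55934b34d179ae6a4c80cadccbb7f0a")


def hkdf_extract(salt: bytes, ikm: bytes) -> bytes:
    """HKDF-Extract with SHA-256 (RFC 5869): PRK = HMAC(salt, IKM)."""
    return hmac.new(salt, ikm, hashlib.sha256).digest()


def hkdf_expand_label(secret: bytes, label: str, length: int) -> bytes:
    """TLS 1.3 HKDF-Expand-Label with an empty context (RFC 8446, Section 7.1)."""
    full_label = b"tls13 " + label.encode("ascii")
    # HkdfLabel: uint16 length, 1-byte-prefixed label, 1-byte-prefixed (empty) context
    info = length.to_bytes(2, "big") + bytes([len(full_label)]) + full_label + b"\x00"
    out, block, counter = b"", b"", 1
    while len(out) < length:
        # HKDF-Expand (RFC 5869): T(i) = HMAC(PRK, T(i-1) | info | i)
        block = hmac.new(secret, block + info + bytes([counter]), hashlib.sha256).digest()
        out += block
        counter += 1
    return out[:length]


def client_initial_keys(dcid: bytes) -> dict:
    """Derive the client's Initial packet-protection keys from the DCID alone."""
    initial_secret = hkdf_extract(INITIAL_SALT_V1, dcid)
    client_secret = hkdf_expand_label(initial_secret, "client in", 32)
    return {
        "key": hkdf_expand_label(client_secret, "quic key", 16),  # AEAD_AES_128_GCM key
        "iv": hkdf_expand_label(client_secret, "quic iv", 12),
        "hp": hkdf_expand_label(client_secret, "quic hp", 16),   # header-protection key
    }


# Example DCID from RFC 9001, Appendix A; an observer reads it from the packet header.
keys = client_initial_keys(bytes.fromhex("8394c8f03e515708"))
print(keys["key"].hex())
```

The output can be checked against the test vectors in RFC 9001, Appendix A.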

Moreover, traditional DDoS defense needs a tune-up. Not only are packet identification and inspection harder, but TCP SYN cookies have been replaced with QUIC “Retry packets,” which an intermediary cannot generate on the server’s behalf without coordinating with it. There are standard ways to coordinate that allow servers to offload Retry packet generation and validation, but that requires development effort.
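A Retry works much like a SYN cookie: the server answers an unverified Initial packet with a token instead of allocating state, and commits resources only once the client echoes that token from the same address. RFC 9000 leaves the token format to the server, so the layout below is hypothetical: an HMAC-SHA256 tag binding a timestamp, the client’s address and port, and the Original Destination Connection ID. Any device that validates tokens on the server’s behalf would need the same key, which is the kind of coordination described above. Production tokens are usually encrypted as well.

```python
"""Sketch of a stateless Retry (address-validation) token. Hypothetical format."""
import hashlib
import hmac
import os
from typing import Optional

SECRET = os.urandom(32)  # hypothetical server-wide key, shared with any validating device
TOKEN_LIFETIME_S = 10    # hypothetical validity window in seconds
TAG_LEN = 32             # SHA-256 output size


def _mac(ts: bytes, client_ip: str, client_port: int, odcid: bytes) -> bytes:
    ip = client_ip.encode()
    msg = ts + bytes([len(ip)]) + ip + client_port.to_bytes(2, "big") + odcid
    return hmac.new(SECRET, msg, hashlib.sha256).digest()


def make_retry_token(client_ip: str, client_port: int, odcid: bytes, now: float) -> bytes:
    """Sent in a Retry packet; the client echoes it in its next Initial packet.
    Layout: 8-byte timestamp | 1-byte ODCID length | ODCID | tag."""
    ts = int(now).to_bytes(8, "big")
    return ts + bytes([len(odcid)]) + odcid + _mac(ts, client_ip, client_port, odcid)


def validate_retry_token(token: bytes, client_ip: str, client_port: int,
                         now: float) -> Optional[bytes]:
    """Return the Original Destination Connection ID if the token is authentic,
    fresh, and came back from the same address; otherwise None. No per-client
    state is kept, much like a SYN cookie."""
    if len(token) < 9 + TAG_LEN:
        return None
    ts, odcid_len = token[:8], token[8]
    odcid, tag = token[9:9 + odcid_len], token[9 + odcid_len:]
    if len(odcid) != odcid_len or len(tag) != TAG_LEN:
        return None
    if not hmac.compare_digest(tag, _mac(ts, client_ip, client_port, odcid)):
        return None
    if now - int.from_bytes(ts, "big") > TOKEN_LIFETIME_S:
        return None
    return odcid
```

Binding the token to the client’s address is what makes this an address check: an attacker spoofing a victim’s source address never receives the token, so it cannot return it.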

Traditional Layer 4 load balancing will break QUIC connection migration: it routes on addresses and ports, so when a client’s address or port changes, it cannot associate the new flow with the existing connection and route it to the same server. Again, there are standards to coordinate and overcome this problem, but they require investment.
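The problem, and the usual remedy, fit in a few lines. The scheme below is hypothetical and loosely modeled on the IETF QUIC-LB draft’s idea of having servers encode a server identifier in every connection ID they issue, so a load balancer can route on the connection ID instead of the client’s address. The server pool, ID length, and function names are illustrative assumptions; the QUIC-LB draft also defines modes that encrypt the server ID.

```python
"""Sketch: address-based vs. connection-ID-based routing under QUIC migration."""
import hashlib
import os
from typing import List, NamedTuple

SERVERS: List[str] = ["backend-0", "backend-1", "backend-2"]  # hypothetical pool
SERVER_ID_LEN = 1  # hypothetical: one octet of the CID identifies the server


class Packet(NamedTuple):
    src_ip: str
    src_port: int
    dcid: bytes  # Destination Connection ID, issued by the server


def route_by_address(pkt: Packet) -> str:
    """Classic L4 balancing: hash the client's address and port."""
    digest = hashlib.sha256(f"{pkt.src_ip}:{pkt.src_port}".encode()).digest()
    return SERVERS[int.from_bytes(digest[:4], "big") % len(SERVERS)]


def make_server_cid(server_index: int, length: int = 8) -> bytes:
    """Server side: every CID it issues carries its ID after the first octet;
    the remaining bytes are random."""
    return (os.urandom(1)
            + server_index.to_bytes(SERVER_ID_LEN, "big")
            + os.urandom(length - 1 - SERVER_ID_LEN))


def route_by_cid(pkt: Packet) -> str:
    """CID-aware balancing: decode the server ID; addresses are ignored."""
    server_index = int.from_bytes(pkt.dcid[1:1 + SERVER_ID_LEN], "big")
    return SERVERS[server_index % len(SERVERS)]


before = Packet("198.51.100.7", 50000, make_server_cid(2))
# After a deliberate migration (e.g. Wi-Fi to cellular) the client switches to another
# CID issued by the same server; RFC 9000 forbids reusing a CID across local addresses.
after = Packet("203.0.113.9", 61000, make_server_cid(2))

print(route_by_cid(before), route_by_cid(after))          # backend-2 backend-2
print(route_by_address(before), route_by_address(after))  # may differ
```

Because the address-based router sees a new address and port after migration, its choice of backend may change; the CID-based router’s choice cannot, as long as every CID the server issues carries its ID.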

The IETF’s MASQUE working group is working on generalized traffic tunneling over QUIC, which could be the basis of next-generation VPN schemes. The properties of QUIC are much better for multiplexing arbitrary flows than TLS over TCP. These tunnels can also replace IPsec tunnels with web-scale cryptography, improving some service provider use cases, and can even provide a way to optimize QUIC connections for mobile link types with explicit client consent.

QUIC will require new network measurement and analysis tools. Systems that inferred latency and loss from TCP sequence and acknowledgment numbers cannot do the same for QUIC, whose equivalents are encrypted. QUIC does expose an explicit latency signal to the network, the spin bit, and other signals may be on the way. With more transport information hidden behind encryption, packet captures are less useful. However, many QUIC implementations follow an emerging standard logging format, qlog, and people are building analysis tools to leverage it.
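The spin bit works as follows: the server echoes the spin value from the latest client packet it received, and the client sends the inverse of the value from the latest server packet it received, so the bit flips about once per round trip in each direction. An on-path observer can therefore estimate RTT by timing successive flips in one direction. The sketch below assumes clean, in-order observations with timestamps in milliseconds; real observers must filter out reordering and loss artifacts, and endpoints may disable the spin bit.

```python
"""Sketch: passive RTT estimation from the QUIC latency spin bit (RFC 9000, Section 17.4)."""
from typing import List, Optional, Tuple


def spin_bit(first_byte: int) -> Optional[int]:
    """Return the spin bit of a short-header (1-RTT) packet, or None for a
    long-header packet. It is the 0x20 bit of the first byte and is not
    covered by header protection."""
    if first_byte & 0x80:  # Header Form bit set: long header, no spin bit
        return None
    return (first_byte & 0x20) >> 5


def rtt_samples(observed: List[Tuple[float, int]]) -> List[float]:
    """observed: (timestamp_ms, spin_bit) for short-header packets seen in ONE
    direction, in arrival order. Each gap between consecutive spin-bit edges
    approximates one round-trip time."""
    samples: List[float] = []
    last_bit: Optional[int] = None
    last_edge: Optional[float] = None
    for ts, bit in observed:
        if last_bit is not None and bit != last_bit:  # an edge: the bit flipped
            if last_edge is not None:
                samples.append(ts - last_edge)
            last_edge = ts
        last_bit = bit
    return samples


trace = [(0, 0), (10, 0), (50, 1), (60, 1), (100, 0), (150, 1)]
print(rtt_samples(trace))  # [50, 50]
```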

F5 is Following Developments Closely

F5 staff have been monitoring standards efforts in the IETF for years and contributing where we think it will help our customers. BIG-IP has been tracking draft releases of QUIC and HTTP/3 from the beginning, including public releases since TMOS v15.1.0.1. NGINX has an HTTP/3 alpha release, and will merge it into the mainline shortly.

We will continue to watch trends in this area and build new and exciting products to reflect the new capabilities that these protocols deliver.

Conclusion

QUIC has broad industry support and the potential to be the basis of most applications that deliver business value over the internet. Anyone delivering applications over the internet should start thinking about how their operations will need to change to reflect the new threats and opportunities that these protocols bring.